Projects

-

KUber - Knowledge Delivery System for Machine Learning at Scale

PI: Dr. Ahmed M. A. Sayed

Funding: EPSRC

Collaborators: Nokia Bell Labs, Samsung AI, and IBM Research

Assignees: Kevin Li, Herman Tam

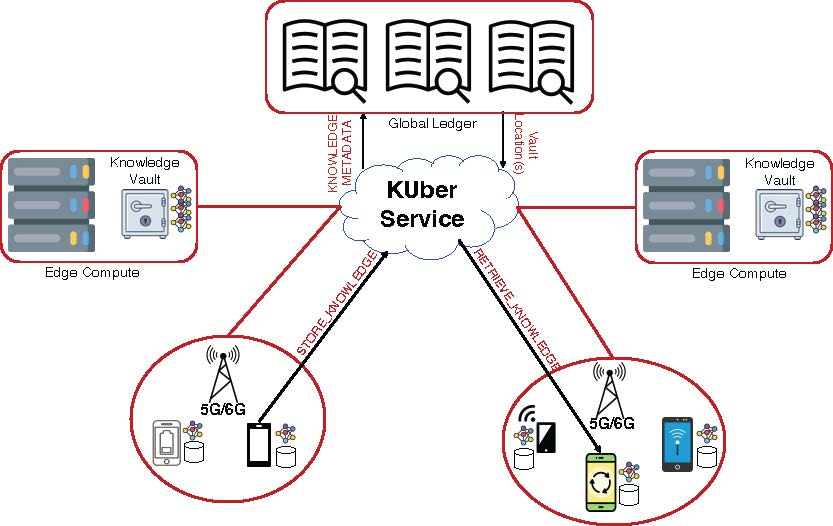

AI/ML systems are becoming an integral part of user products and applications, as well as the main revenue driver for most organizations. This has shifted the focus toward the Edge AI paradigm, since edge devices hold the data necessary for training the models. The main Edge AI approaches either coordinate the training rounds and exchange model updates via a central server (i.e., federated learning), split the model training task between edge devices and a server (i.e., split learning), or coordinate the model exchange among the edge devices via gossip protocols (i.e., decentralized training). Because of the highly heterogeneous learners, configurations, and environments, as well as significant synchronization challenges, these approaches are ill-suited for distributed edge learning at scale. They fail to scale to a large number of learners and produce low-quality models after prolonged training times. Modern applications need a system that provides timely and accurate models. This project addresses this gap by proposing a ground-up transformation of decentralized learning methods. Similar to Uber's delivery services, the goal of KUber is to build a novel distributed architecture that facilitates the exchange and delivery of acquired knowledge among the learning entities. In particular, we seize an opportunity to decouple the task of training a common model from the task of sharing learned knowledge. This is made possible by advances in the AI/ML accelerators embedded in edge devices and by high-throughput, low-latency 5G/6G technologies. KUber will revolutionize the use of AI/ML methods in daily-life applications and open the door for flexible, scalable, and efficient collaborative learning between users, organizations, and governments.

-

Advancing Large Audio Models via Accelerated Deep Learning

PI: Dr. Ahmed M. A. Sayed

Funding: EPSRC AIM CDT

Collaborators: Tencent AI

Assignees: Bradly Aldous

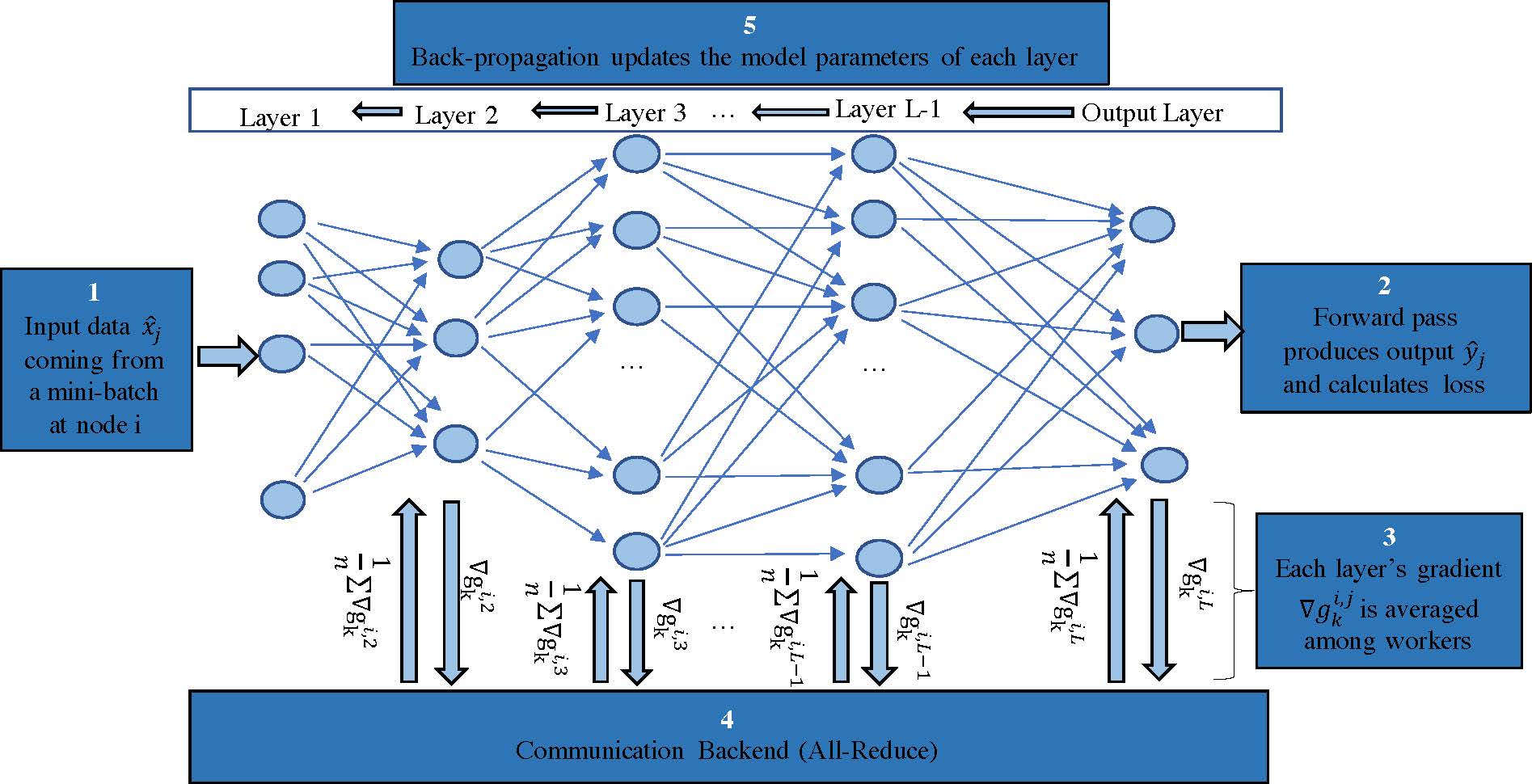

The capability of generating audio in real time from large-scale audio data is becoming a key topic in generative art. Artificial Intelligence (AI) has been widely used to generate images and, more recently, to generate audio through deep learning techniques. Audio generation uses Deep Neural Network (DNN) architectures to produce audio automatically. Unfortunately, to cope with the growth in audio data, DNN models have also grown in size, from millions of parameters (e.g., RNNs and LSTMs) to billions (e.g., GPT-3). Consequently, the time, computational cost, and carbon footprint required to train and deploy DNN models for audio generation have grown sharply. This PhD project aims to investigate, propose, and implement novel optimizations at the algorithmic and system levels to accelerate the training and inference of DNN models for audio generation in HPC or cloud environments. This involves solving a hard multi-objective optimization problem over time, computation, and energy efficiency. Based on these optimizations, we will develop software framework(s) that accelerate audio generation tasks. This will help advance the field of audio generation by making it more sustainable and affordable.

-

Energy Efficiency in Battery-Powered Federated Learning

PI: Dr. Ahmed M. A. Sayed

Funding: Self-funded

Collaborators: None

Assignees: Amna Arouj

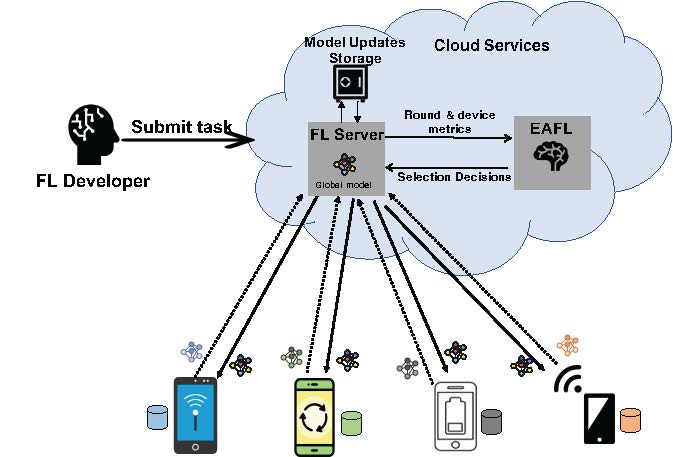

Federated learning (FL) is a recently emerged branch of AI that enables edge devices to collaboratively train a global machine learning model without centralizing data and with privacy by default. However, despite remarkable advances, this paradigm comes with various challenges. Specifically, in large-scale deployments, client heterogeneity is the norm, which impacts training quality in terms of accuracy, fairness, and time. Moreover, energy consumption across these battery-constrained devices is largely unexplored and limits the wide adoption of FL. To address this issue, we develop EAFL, an energy-aware FL selection method that considers energy consumption to maximize the participation of heterogeneous target devices. EAFL is a power-aware training algorithm that cherry-picks clients with higher battery levels while accounting for their ability to maximize system efficiency. Our design jointly minimizes the time-to-accuracy and maximizes the remaining on-device battery levels. EAFL improves the testing model accuracy by up to 85% and decreases the drop-out of clients by up to 2.45X.
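
The idea of trading off battery level against system efficiency during client selection can be sketched as follows. This is an illustrative sketch only, not the EAFL algorithm itself: the `Client` fields, the linear scoring rule, and the `battery_weight` and `min_battery` parameters are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Client:
    client_id: str
    battery: float  # remaining battery as a fraction in [0, 1]
    utility: float  # hypothetical efficiency score in [0, 1]; higher is better


def select_clients(clients, k, battery_weight=0.5, min_battery=0.1):
    """Pick k clients by a weighted mix of battery level and utility.

    Clients below min_battery are skipped so that a training round does
    not drain them completely (an assumption of this sketch).
    """
    eligible = [c for c in clients if c.battery >= min_battery]

    def score(c):
        return battery_weight * c.battery + (1.0 - battery_weight) * c.utility

    return sorted(eligible, key=score, reverse=True)[:k]


clients = [
    Client("a", battery=0.90, utility=0.40),
    Client("b", battery=0.05, utility=0.95),  # efficient but nearly empty
    Client("c", battery=0.60, utility=0.80),
    Client("d", battery=0.30, utility=0.30),
]
chosen = [c.client_id for c in select_clients(clients, k=2)]
```

Here client `b` is excluded despite its high utility because its battery is below the threshold, which is the kind of drop-out the energy-aware selection is meant to avoid.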

-

Leveraging Curriculum Learning for Efficient and Robust Federated Learning

PI: Dr. Ahmed M. A. Sayed

Funding: Self-funded

Collaborators: None

Assignees: Kevin Li, Pantelis Papageorgiou

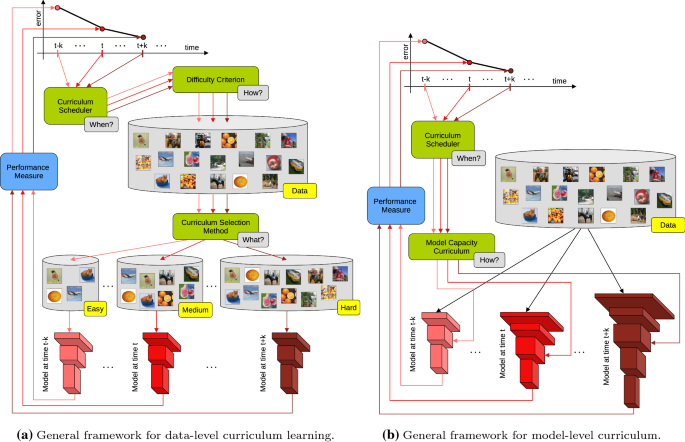

In FL scenarios, we usually assume that all clients are honest and have no bad intentions. However, in real-world cases, some clients may try to harm the FL process. In this project, we investigate the impact of malicious clients on the FL process and how to mitigate it. We consider two attack types: untargeted attacks (random perturbation of a client's labels) and targeted attacks (e.g., labeling each 3 as 8 on the MNIST dataset). We also introduce a new defense mechanism based on Curriculum Learning (CL), which builds on the idea of learning from easy to hard examples. We show that CL can be used to mitigate the impact of malicious clients on the FL process. Ideally, we want to detect these malicious clients in advance and not aggregate their updated weights over the rounds they participate in. The more accurate the prediction of the malicious clients is, the better the final model will be (in terms of F1-score, top-1 and top-5 accuracy, etc.).
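
The two label-flipping attacks and the easy-to-hard ordering behind curriculum learning can be sketched in a few lines. This is a minimal illustration under stated assumptions: the function names, the `flip_prob` parameter, and the per-example `difficulty` scores are hypothetical and do not come from the project's code.

```python
import random


def untargeted_flip(labels, num_classes, flip_prob, rng):
    """Untargeted attack: replace a label with a random wrong class."""
    flipped = []
    for y in labels:
        if rng.random() < flip_prob:
            wrong = [c for c in range(num_classes) if c != y]
            flipped.append(rng.choice(wrong))
        else:
            flipped.append(y)
    return flipped


def targeted_flip(labels, source=3, target=8):
    """Targeted attack: relabel every `source` example as `target`."""
    return [target if y == source else y for y in labels]


def curriculum_order(examples, difficulty):
    """Order examples from easy to hard by an assumed difficulty score."""
    pairs = sorted(zip(difficulty, examples), key=lambda p: p[0])
    return [ex for _, ex in pairs]


labels = [3, 1, 3, 8, 0, 7]
targeted = targeted_flip(labels)
untargeted = untargeted_flip(labels, num_classes=10, flip_prob=1.0,
                             rng=random.Random(0))
ordered = curriculum_order(["x", "y", "z"], [0.9, 0.1, 0.5])
```

In practice the difficulty score could come from, for example, the per-example loss of a reference model; that choice is left open here.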

-

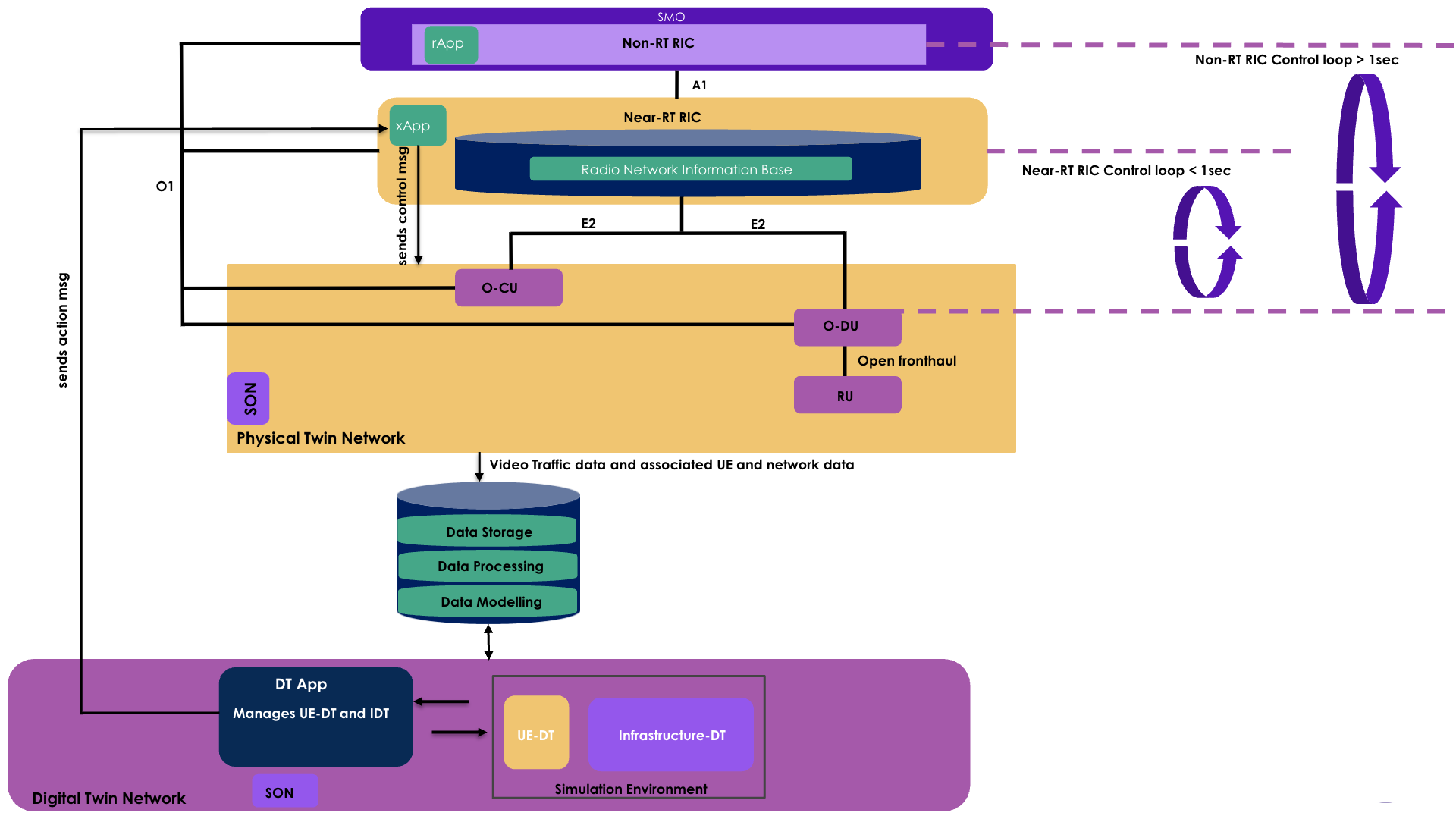

Data-Driven Digital Twin Architecture for Video Streaming Degradation Detection

PI: Dr. Ahmed M. A. Sayed

Funding: EPSRC DCE CDT

Collaborators: None

Assignees: Yemisi Oyeleke

There has been tremendous success in predicting degradation in video streaming quality using network QoS metrics, and with the advent of Self-Organizing Networks (SON), mitigating it in real time is a possibility. However, SON requires robust testing and an open interface to overcome its conflict-prone functions and proprietary-interface challenges. A SON-enabled digital twin, a virtual replica of a SON-enabled network with O-RAN as the chosen RAN type, can help solve these challenges. The digital twin will act as a robust test platform on which degradation detection and mitigation for video streaming are achieved using machine learning and SON capabilities.

-

Moderation in Decentralised Social Networks (DSNmod)

PI: Dr. Ignacio Castro

Funding: UKRI-EPSRC (REPHRAIN Centre)

Collaborators: Dr. Ignacio Castro (QMUL, UK) and Dr. Gareth Tayson (HKUST GZ), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: Vibhor Agarwal

The DSNmod project received UKRI-EPSRC funding of 81K GBP from the National Research Centre on Privacy, Harm Reduction, and Adversarial Influence Online (REPHRAIN). REPHRAIN is an EPSRC-funded research center that forms part of the broader UKRI investment focusing on protecting citizens online. Dr. Ahmed co-investigates the project with Dr. Ignacio Castro (QMUL) and Dr. Gareth Tayson (HKUST GZ). He is investigating the development of the federated learning system that will train the models necessary for real-time content moderation of large-scale social networks.

-

Machine Learning Architecture for Task-based Information Transfer

PI: Dr. Marco Canini

Funding: KAUST-CRF

Collaborators: Dr. Marco Canini (KAUST, KSA) and Dr. Marco Cheise (KTH, Sweden), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: Mohammed Aljahdali

This project is funded by a KAUST Competitive Research Fund (CRF) grant of 400K USD. Dr. Ahmed contributed the project idea and proposal, and co-investigates the project in collaboration with the PI at KAUST (Saudi Arabia) and another Co-I at KTH (Sweden). The project seeks to develop and understand the network infrastructure needed to support the exchange of information among distributed learning parties.

-

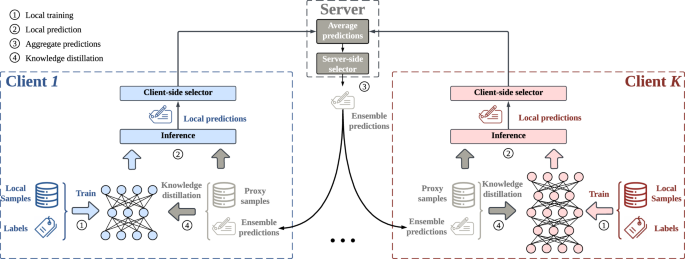

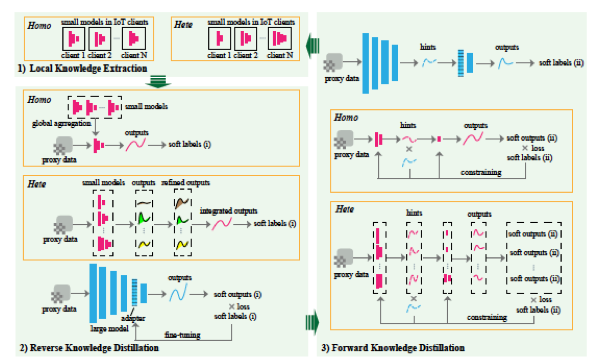

Effective Knowledge Distillation for Collaborative Learning

PI: Dr. Marco Canini

Funding: None

Collaborators: Dr. Marco Canini (KAUST, KSA), and Dr. Ahmed M. A. Sayed (QMUL)

Assignees: Norah Alballa

This project seeks to develop and understand effective methods for performing knowledge distillation among cooperating distributed parties.

-

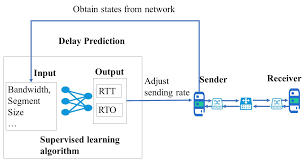

ML Congestion Control in SDN-based Heterogeneous Multi-Tenant Data Center Networks

PI: Dr. Brahim Bensaou

Funding: HK-GRF

Collaborators: Dr. Brahim Bensaou (HKUST, Hong Kong), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: Waheed Gameel

This project is funded by an HK General Research Fund (GRF) grant of 600K HKD. Dr. Ahmed co-investigates the project in collaboration with the PI at HKUST (Hong Kong). The project seeks to develop and apply novel ML techniques to innovate congestion control in SDN-based multi-tenant data centers.

-

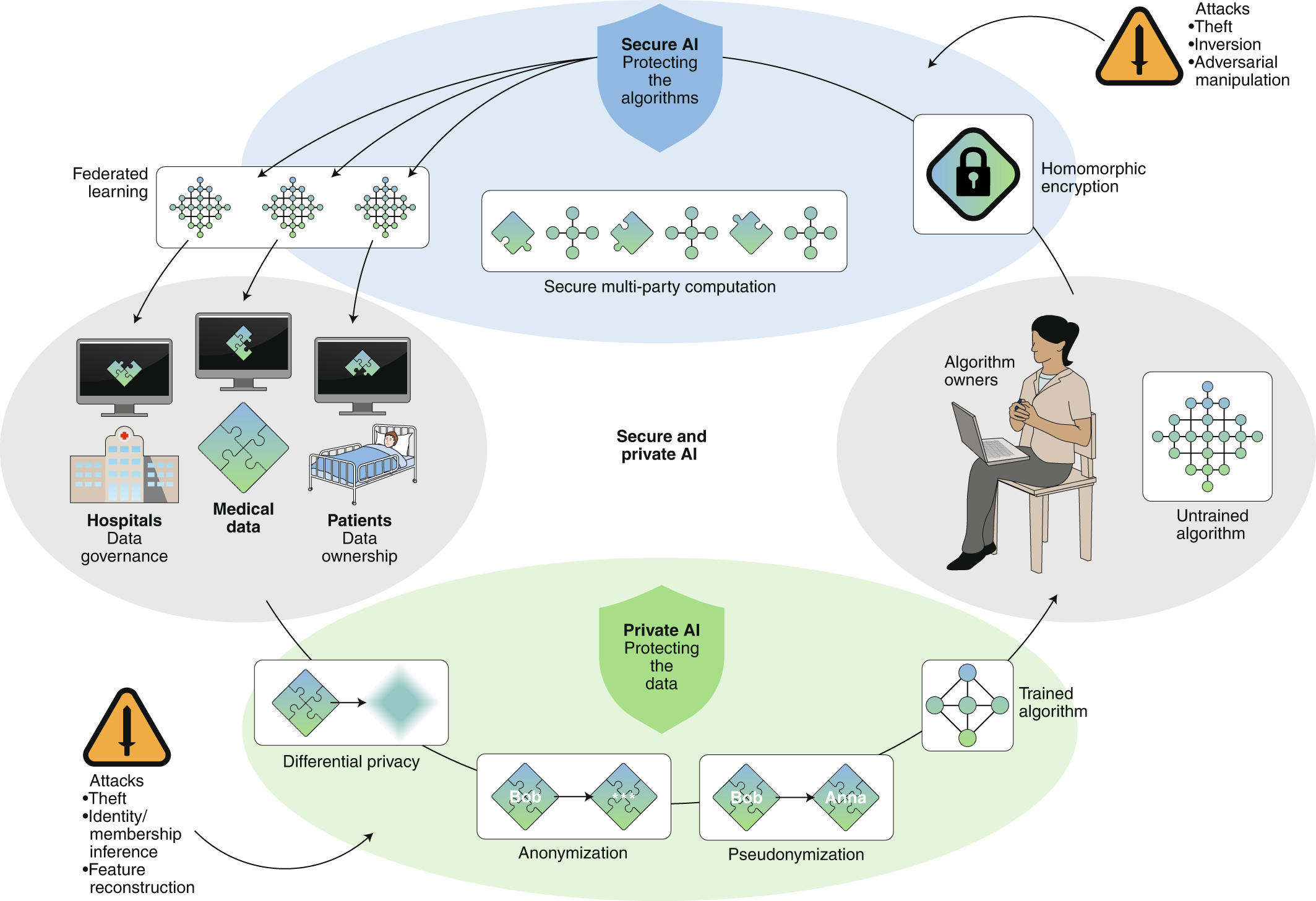

Privacy Enhancing Technologies for Federated Learning

PI: Dr. Ali Anwar

Funding: None

Collaborators: Dr. Ali Anwar (University of Minnesota, USA), Dr. Ahmed M. A. Sayed (QMUL), Dr. Arnab K. Paul (BITS Pilani)

Assignees: Sam Fountain, Xinran Wang, Yuvraj

We aim to conduct a comprehensive empirical performance analysis and optimization of privacy-enhancing technologies (PETs), e.g., homomorphic encryption, differential privacy, and secure multi-party computation, for federated learning. The project aims to develop a complete framework for experimenting with various PETs in FL settings and to present metrics for evaluating the efficacy, performance, and overhead of these methods.
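
As one concrete example of a PET in an FL setting, differential privacy is commonly applied by clipping each client update to a bounded norm and adding Gaussian noise before it is sent. The sketch below shows only that step; the function names and the `max_norm` and `noise_multiplier` parameters are assumptions for illustration, privacy accounting is omitted, and it does not represent the project's framework.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update so that its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, max_norm / norm) if norm > 0.0 else 1.0
    return [x * scale for x in update]


def privatize(update, max_norm, noise_multiplier, rng):
    """Clip the update, then add Gaussian noise scaled to the clip norm."""
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [x + rng.gauss(0.0, sigma) for x in clipped]


clipped = clip_update([3.0, 4.0], max_norm=1.0)  # norm 5 is scaled down to 1
noisy = privatize([3.0, 4.0], max_norm=1.0, noise_multiplier=1.1,
                  rng=random.Random(42))
```

The overhead side of the trade-off shows up here as lost signal: a larger `noise_multiplier` gives stronger privacy but a noisier aggregate, which is the kind of effect an empirical evaluation would measure.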

-

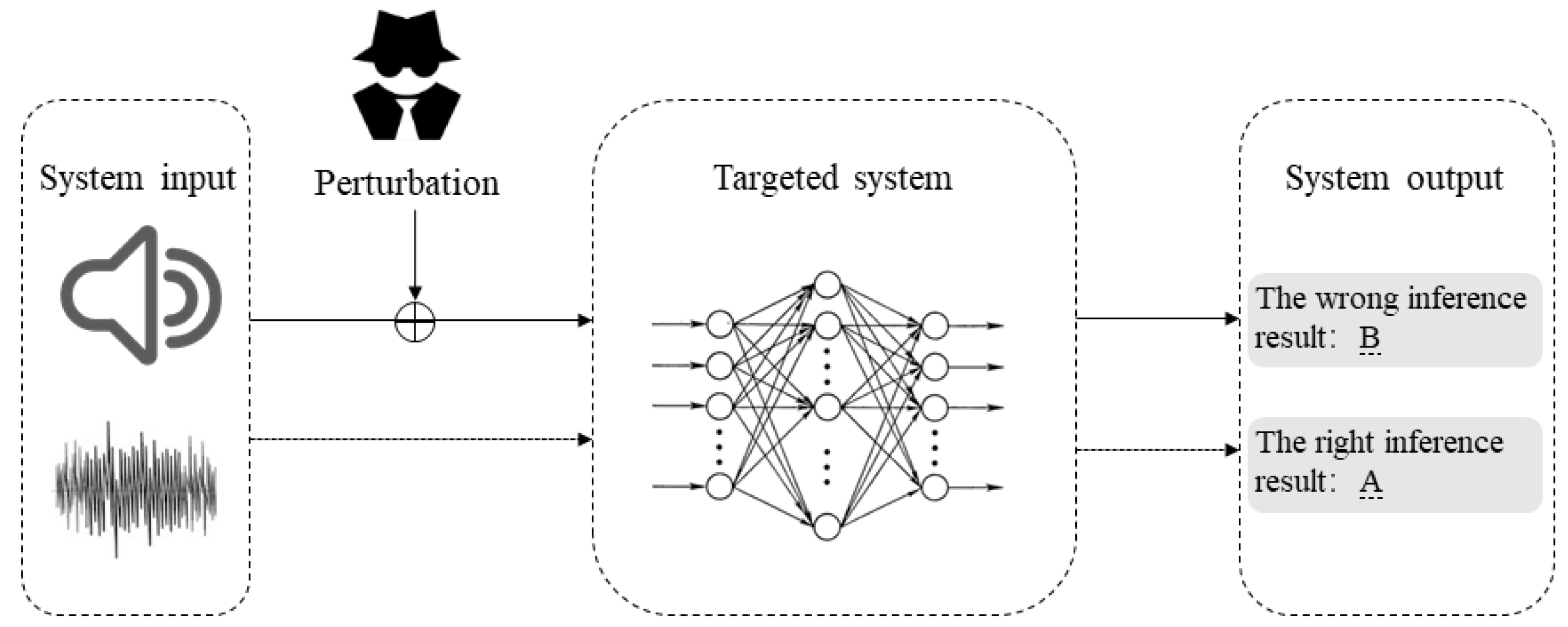

Adversarial Patterns and Countermeasures in Machine Learning

PI: Dr. Chen Wang

Funding: None

Collaborators: Dr. Chen Wang (Huazhong University of Science and Technology, China), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: None

We aim to study adversarial patterns and countermeasures in machine learning. This project aims to investigate the vulnerabilities of machine learning models to adversarial attacks by exploring the creation and manipulation of input data to deceive these models. It involves analyzing adversarial patterns, such as perturbations or alterations in input data that lead to incorrect predictions, and devising robust countermeasures to enhance the models' resilience against such attacks. By understanding these vulnerabilities, the project seeks to develop novel defenses to fortify machine learning systems against adversarial manipulation, ensuring more reliable and secure AI applications across various domains.

-

Federated Knowledge Transfer Fine-tuning of Large Models on Resource Constrained Devices

PI: Dr. Linlin You

Funding: None

Collaborators: Dr. Linlin You (Sun Yat-Sen University, China), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: None

Federated Knowledge Transfer Fine-tuning (FKT-FT) enables the deployment and adaptation of large pre-trained models on resource-constrained devices in a federated setting. Instead of directly training the large model locally, this approach distills knowledge from a global model to a smaller, more efficient local model tailored to each device’s constraints. The process involves federated collaboration, where devices share updates of lightweight models or intermediate representations rather than raw data, preserving privacy and reducing communication overhead. By leveraging knowledge transfer techniques, FKT-FT ensures that devices benefit from the global model’s capabilities while optimizing for local hardware and data distribution limitations, enabling scalable and efficient deployment of AI in edge and IoT environments.
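
Distilling knowledge from a large model into a smaller one is typically driven by a loss that pulls the student's softened output distribution toward the teacher's. The following is a minimal sketch of the standard temperature-scaled distillation loss, written in plain Python for readability; it is a generic formulation, not necessarily the loss used in FKT-FT, and the function names and the `temperature` default are assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)
    return temperature ** 2 * kl


teacher = [1.0, 2.0, 3.0]
loss_match = distillation_loss([1.0, 2.0, 3.0], teacher)     # student agrees
loss_mismatch = distillation_loss([3.0, 2.0, 1.0], teacher)  # student disagrees
```

The loss is zero when the student reproduces the teacher's distribution and grows as the two diverge, so minimizing it on each device transfers the global model's behavior to the lightweight local model.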

-

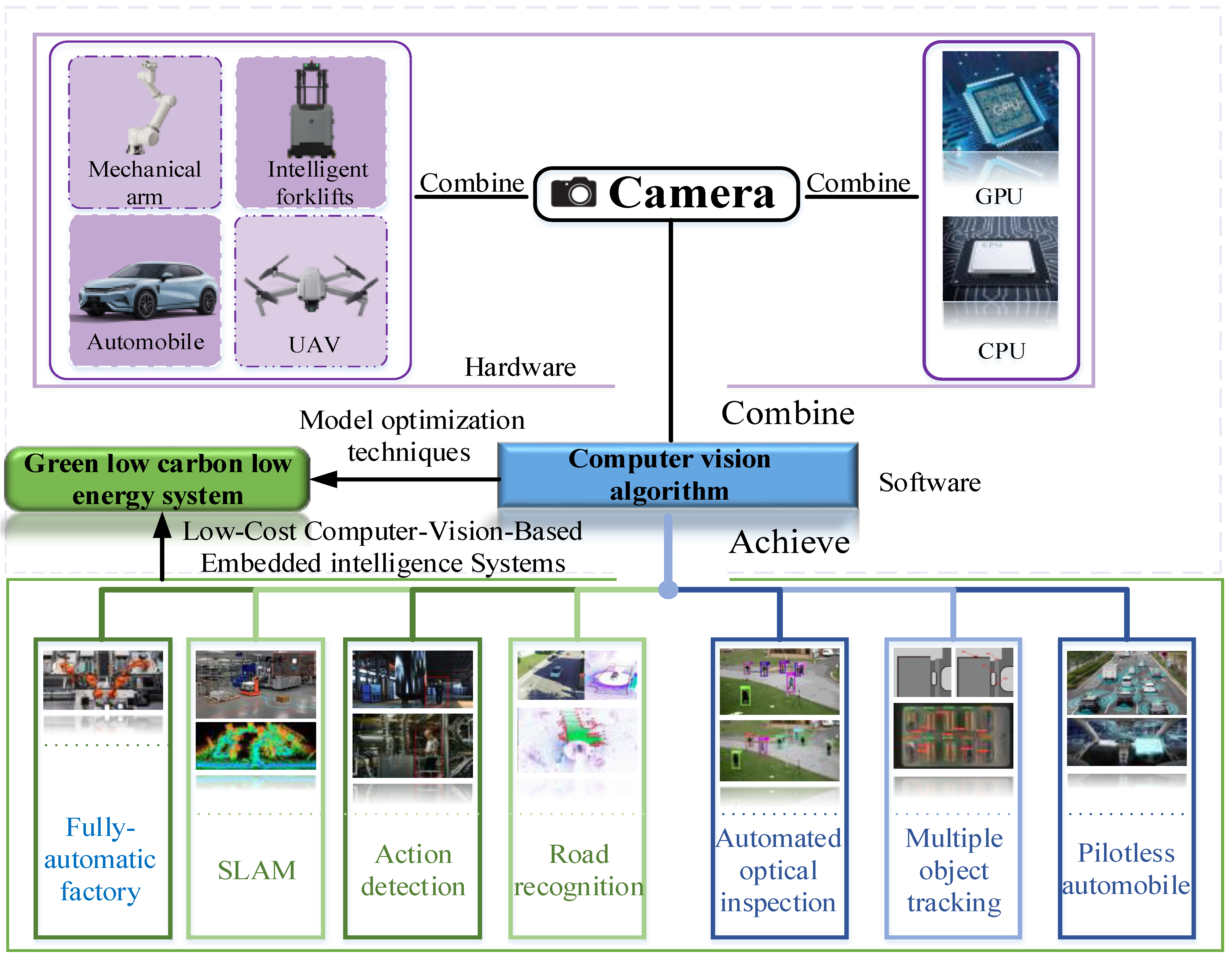

Efficient and Scalable Vision Models for Object ReIDentification

PI: Dr. Mingliang Gao

Funding: None

Collaborators: Dr. Mingliang Gao (Shandong University of Technology, China), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: None

This project aims to develop lightweight, high-performance models capable of identifying objects across diverse scenes and camera viewpoints while maintaining scalability for large-scale deployment. The project focuses on optimizing model architectures to balance accuracy and computational efficiency, incorporating techniques such as compact representation learning, knowledge distillation, and adaptive feature extraction. By leveraging scalable training pipelines and domain adaptation strategies, the approach ensures robust performance in real-world scenarios with heterogeneous data and resource constraints. The goal is to enable practical, cost-effective applications in areas such as surveillance, retail, and autonomous systems.

-

Optimizations for Efficient Computer Networks

Funding: None

Collaborators: Dr. Chen Tian (Nanjing University, China), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: None

This project is dedicated to fine-tuning and streamlining computer networks for enhanced performance and resource utilization. It involves an in-depth analysis of network traffic patterns, infrastructure configurations, and protocol efficiencies to identify areas for optimization. Through the implementation of advanced routing algorithms, load balancing techniques, and Quality of Service (QoS) mechanisms, the aim is to efficiently manage network resources, minimize latency, and maximize throughput. Additionally, the project explores the integration of emerging technologies such as Software-Defined Networking (SDN) or Network Function Virtualization (NFV) to enable more agile, programmable, and scalable networks. By leveraging these innovative approaches, the project seeks to create highly optimized computer networks that can adapt dynamically to varying demands, ensuring optimal performance and resource utilization across diverse network environments.

-

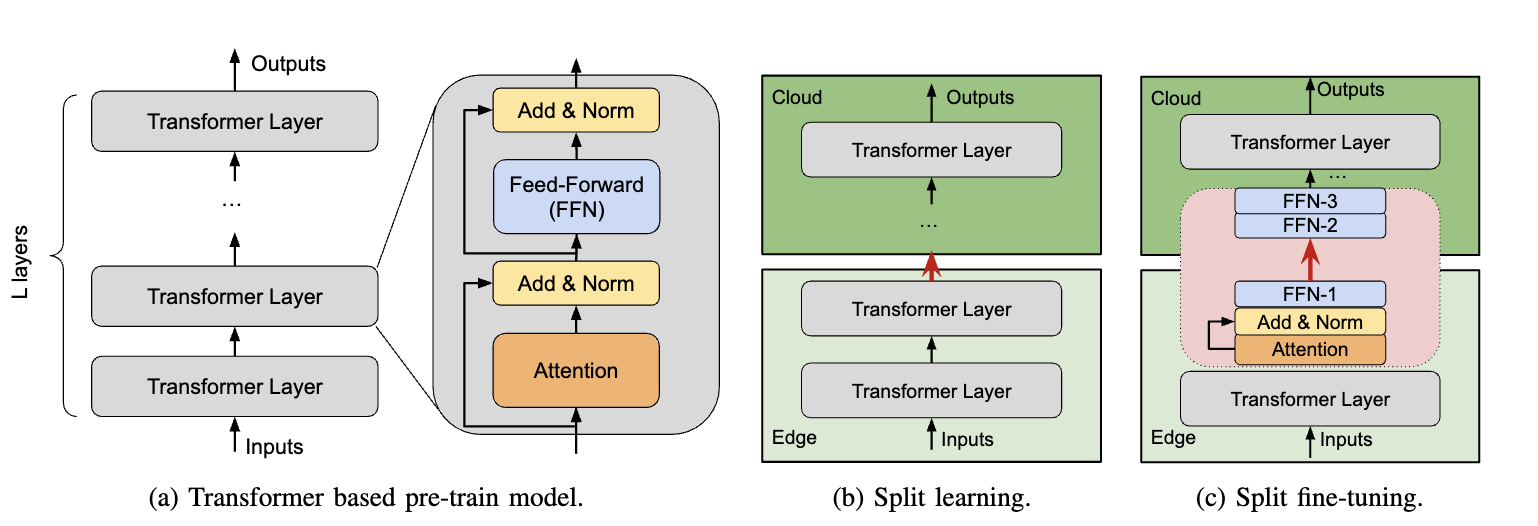

Split Fine-tuning Framework for Edge and Cloud Collaborative Learning

PI: Dr. Shaohuai Shi

Funding: None

Collaborators: Dr. Shaohuai Shi (Harbin Institute of Technology, China), Dr. Ahmed M. A. Sayed (QMUL)

Assignees: Qing Yang and Yang Xiang

To enable pre-trained models to be fine-tuned with local data on edge devices without sharing data with the cloud, we design an efficient split fine-tuning (SFT) framework for edge and cloud collaborative learning. We propose three novel techniques in this framework. First, we propose a matrix decomposition-based method to compress the intermediate output of a neural network to reduce the communication volume between the edge device and the cloud server. Second, we eliminate particular links in the model without affecting the convergence performance in fine-tuning. Third, we implement our system atop PyTorch to allow users to easily extend their existing training scripts to enjoy efficient edge and cloud collaborative learning. The preliminary experimental results on 9 NLP datasets show that our framework can reduce the communication traffic by a factor of 96 with little impact on the model accuracy.
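
To illustrate why a matrix decomposition reduces communication volume, the sketch below compresses an intermediate activation matrix into two vectors using a rank-1 factorization computed by power iteration. The description above does not say which decomposition the framework uses, so this is a stand-in chosen for brevity; the function names and the `iters` parameter are hypothetical, and a real system would likely keep more than one rank.

```python
import math


def rank1_compress(matrix, iters=50):
    """Approximate `matrix` (a list of rows) as the outer product u v^T."""
    rows, cols = len(matrix), len(matrix[0])
    v = [1.0 / math.sqrt(cols)] * cols
    for _ in range(iters):
        # Power iteration step on A^T A: u = A v, then v = A^T u (normalized).
        u = [sum(matrix[i][j] * v[j] for j in range(cols)) for i in range(rows)]
        w = [sum(matrix[i][j] * u[i] for i in range(rows)) for j in range(cols)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    # u absorbs the singular value, so only u and v need to be sent.
    u = [sum(matrix[i][j] * v[j] for j in range(cols)) for i in range(rows)]
    return u, v


def rank1_decompress(u, v):
    """Rebuild the approximate matrix on the receiving side."""
    return [[ui * vj for vj in v] for ui in u]


activations = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0]]  # exactly rank 1
u, v = rank1_compress(activations)
restored = rank1_decompress(u, v)
sent, original = len(u) + len(v), len(activations) * len(activations[0])
```

For an m-by-n activation matrix, the edge device sends m + n values instead of m * n, so the saving grows with the matrix size; the price is approximation error whenever the activations are not close to low rank.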